Let's Build! A Simple Load Balancer with Golang

In a previous post we introduced the Eight Pillars of Fault-tolerant Systems. Today we will discuss one of them: load balancing.

Load balancing is a technique used in distributed systems to distribute network traffic across multiple servers. This method aims to optimize resource use, maximize throughput, minimize response time, and prevent overload of any single resource.

Load balancing not only helps ensure high availability and reliability by distributing the load amongst several servers, but it also allows the system to scale in response to an increased load.

Understanding Load Balancing Algorithms

There are several algorithms that load balancers can use to determine how to distribute the load among the available servers. Here are some of the most common ones:

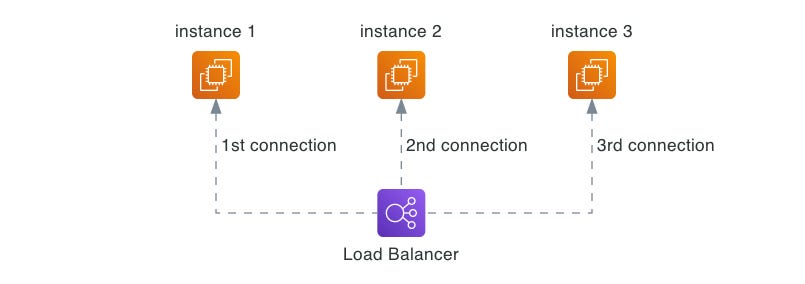

Round Robin: This is one of the simplest methods. In Round Robin, the load balancer cycles through a list of servers and sends individual requests to each in turn. When it reaches the end of the list, it starts over at the beginning.
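As a rough illustration of the cycling described above, here is a minimal round-robin picker in Go. The RoundRobin type, its fields, and the backend names are made up for this sketch; it assumes a fixed, non-empty backend list and ignores health.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// RoundRobin cycles through a fixed list of backends.
// The type and field names are illustrative only.
type RoundRobin struct {
	next     uint64 // request counter, updated atomically
	backends []string
}

// Next returns the backend for the current request and advances the counter.
// It assumes the backend list is not empty.
func (rr *RoundRobin) Next() string {
	n := atomic.AddUint64(&rr.next, 1) - 1
	return rr.backends[n%uint64(len(rr.backends))]
}

func main() {
	rr := &RoundRobin{backends: []string{"a", "b", "c"}}
	for i := 0; i < 4; i++ {
		fmt.Println(rr.Next()) // the fourth call wraps around to "a"
	}
}
```

The atomic counter lets many goroutines call Next concurrently without a mutex.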

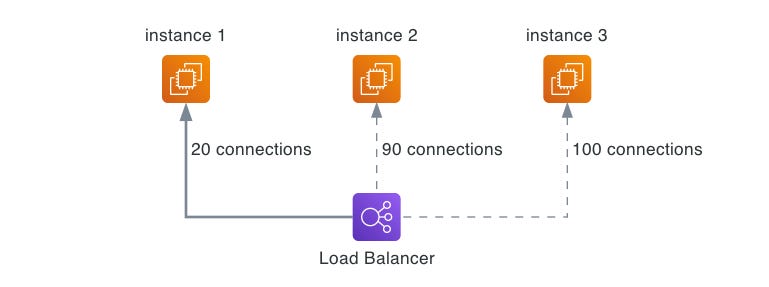

Least Connections: This method directs traffic to the server with the fewest active connections. This is especially useful when there can be significant variance in the amount of time it takes to service requests.

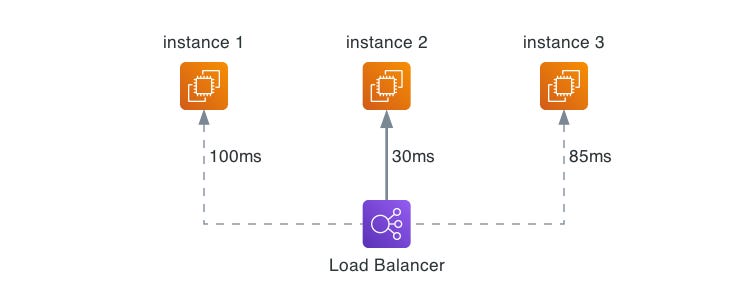

Least Response Time: The load balancer sends requests to the server that has the fewest active connections and the lowest average response time.

IP Hash: The load balancer uses the client's IP address to determine which server receives the request. This can be useful to maintain user session consistency in situations where the session data is not shared between all servers.
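One simple way to sketch IP hashing in Go is to hash the client's IP and take the result modulo the number of backends. The function name pickByIP and the addresses below are assumptions for this example, and FNV-1a is just one reasonable hash choice.

```go
package main

import (
	"fmt"
	"hash/fnv"
	"net"
)

// pickByIP maps a client address ("host:port" or a bare IP) to a backend.
// It returns "" when the backend list is empty.
func pickByIP(remoteAddr string, backends []string) string {
	if len(backends) == 0 {
		return ""
	}
	host, _, err := net.SplitHostPort(remoteAddr)
	if err != nil {
		host = remoteAddr // no port present; hash the whole string
	}
	h := fnv.New32a()
	h.Write([]byte(host))
	return backends[h.Sum32()%uint32(len(backends))]
}

func main() {
	backends := []string{"http://localhost:8081", "http://localhost:8082", "http://localhost:8083"}
	// Same client IP, different source ports: both calls pick the same backend.
	fmt.Println(pickByIP("203.0.113.7:50412", backends))
	fmt.Println(pickByIP("203.0.113.7:50999", backends))
}
```

With plain modulo hashing, adding or removing a backend remaps most clients, which is the problem consistent hashing schemes try to reduce.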

URL Hash: The load balancer directs requests based on the request URL. All requests for a particular URL will be directed to the same server as long as no servers are added or removed.

Weighted Distribution: In this method, each server is assigned a weight based on its processing capacity. The load balancer uses these weights to distribute requests more heavily towards servers with higher capacity.
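A minimal sketch of weighted distribution, assuming static integer weights: the n-th request is mapped onto a slot in a cycle whose length is the sum of all weights. The weightedBackend type and pickWeighted function are hypothetical names for this example.

```go
package main

import "fmt"

// weightedBackend pairs a backend name with its relative capacity.
type weightedBackend struct {
	name   string
	weight int
}

// pickWeighted returns the backend for the n-th request (n >= 0).
// Each backend owns `weight` consecutive slots in a cycle of total weight.
// It returns "" if the total weight is not positive.
func pickWeighted(backends []weightedBackend, n int) string {
	total := 0
	for _, b := range backends {
		total += b.weight
	}
	if total <= 0 {
		return ""
	}
	slot := n % total
	for _, b := range backends {
		if slot < b.weight {
			return b.name
		}
		slot -= b.weight
	}
	return "" // not reached when all weights are non-negative
}

func main() {
	backends := []weightedBackend{{"big", 3}, {"small", 1}}
	for n := 0; n < 8; n++ {
		fmt.Print(pickWeighted(backends, n), " ")
	}
	fmt.Println()
}
```

This version sends requests to the heavier server in bursts; production balancers often interleave the picks more smoothly, but the long-run proportions are the same.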

Source IP Affinity (or "sticky sessions"): The load balancer uses the IP address of the client to map them to a server. All of the client's requests are directed to the same server unless it becomes unavailable.

Maglev: A load balancing algorithm developed by Google that uses consistent hashing to distribute client requests across servers, keyed on client identifiers such as IP addresses. It maintains high flow affinity, directing the same client to the same server across sessions as long as that server is available. If servers go offline, it updates a lookup table to redistribute traffic among the remaining servers while disturbing as few existing mappings as possible.

Types of Load Balancing

Load balancing also operates at different layers of the network protocol stack, namely the transport layer (Layer 4 or L4) and the application layer (Layer 7 or L7).

L4 - Transport Layer Load Balancing: At Layer 4, the transport layer, load balancers distribute traffic based on network-level information: source and destination IP addresses and the TCP or UDP ports. The load balancer makes its routing decisions from the packet headers and does not inspect the packet payload, so it is unaware of the specifics of the application data it's handling. Because it operates at a lower level of the network stack and does less work per packet, an L4 load balancer is a high-speed, high-volume traffic management solution, well suited to heavy traffic and to applications where you want to minimize processing overhead.

L7 - Application Layer Load Balancing: Unlike its L4 counterpart, an L7 load balancer routes traffic based on content-related factors. These factors could include HTTP header information, cookies, or other application-specific parameters. An L7 load balancer inspects the content of the message and makes a routing decision based on that content. This could be anything from what kind of client device is making the request, to what type of content the client is requesting, to the specific application protocol of the message (like HTTP for web traffic). This allows for much more intelligent and complex distribution of traffic, enabling optimization at the application level and facilitating more advanced features like content switching, SSL offloading, and request rewriting.

There are many load balancing solutions available, both software and hardware-based. Hardware load balancers are dedicated devices that are specifically designed for the task of load balancing, while software load balancers are applications that run on standard server hardware.

Hardware load balancers usually offer higher performance and reliability due to their specialized hardware, but they can be more expensive than software solutions. Software load balancers, on the other hand, are typically more flexible and easier to manage, but may not offer the same level of performance or reliability as hardware-based solutions.

Let’s Build! A Simple Load Balancer with Golang

To illustrate how a software load balancer can be implemented, we will build a simple one in the Go programming language.

Before diving into the implementation, let's define our requirements:

The load balancer should handle multiple incoming connections concurrently.

The load balancer should distribute the load to the least loaded server using the Least Connections algorithm.

The load balancer config should be stored in a separate file.

The load balancer needs to implement health checks and only route requests to healthy backend servers.

First, let's start with defining config.json, where we define the basic configuration for our load balancer:

    {
        "listenPort": ":8080",
        "healthCheckInterval": "5s",
        "servers": [
            "http://localhost:8081",
            "http://localhost:8082",
            "http://localhost:8083"
        ]
    }

Now let's define two Go structs, Server and Config, to represent a server and a configuration respectively:

    // Imports used by this snippet: "net/url", "sync".

    type Server struct {
        URL               *url.URL   // URL of the backend server
        ActiveConnections int        // Count of active connections
        Mutex             sync.Mutex // A mutex for safe concurrency
        Healthy           bool       // Result of the most recent health check
    }

    type Config struct {
        HealthCheckInterval string   `json:"healthCheckInterval"`
        Servers             []string `json:"servers"`
        ListenPort          string   `json:"listenPort"`
    }

Note: we use a mutex to prevent race conditions, which can occur when multiple goroutines access and modify the same data concurrently (in our case, the ActiveConnections count).

Next, we define a loadConfig function that reads a JSON configuration file and returns a Config instance:

    // Imports used by this snippet: "encoding/json", "os".

    func loadConfig(file string) (Config, error) {
        var config Config

        // os.ReadFile replaces ioutil.ReadFile, which is deprecated since Go 1.16.
        bytes, err := os.ReadFile(file)
        if err != nil {
            return config, err
        }

        err = json.Unmarshal(bytes, &config)
        if err != nil {
            return config, err
        }

        return config, nil
    }

This function simply reads the file, unmarshals the JSON content into a Config instance, and returns it.

Our load balancer uses the Least Connections algorithm, which selects the server with the fewest active connections. Here is how we implement it:

    // Imports used by the snippets from here on: "log", "net/http",
    // "net/http/httputil", "net/url", "time".

    // nextServerLeastActive returns the healthy server with the fewest active
    // connections, or nil if no server is currently healthy.
    func nextServerLeastActive(servers []*Server) *Server {
        var leastActiveServer *Server
        leastActiveConnections := 0
        for _, server := range servers {
            server.Mutex.Lock()
            if server.Healthy && (leastActiveServer == nil || server.ActiveConnections < leastActiveConnections) {
                leastActiveConnections = server.ActiveConnections
                leastActiveServer = server
            }
            server.Mutex.Unlock()
        }
        return leastActiveServer
    }

This function iterates through all servers and, for each one, checks the number of active connections. If a server is marked as healthy and has fewer connections than the current least, we select it as the new target. If no server is healthy, the function returns nil, so the caller has to handle that case.

The Server struct needs a Proxy method that returns a reverse proxy instance configured to forward requests to the backend server:

    func (s *Server) Proxy() *httputil.ReverseProxy {
        return httputil.NewSingleHostReverseProxy(s.URL)
    }

The main function is where it all comes together. It begins by loading the configuration from config.json. Then it initializes a slice of Server instances from the list of server URLs in the configuration. For each server it starts a goroutine that checks the server's health once at startup and then periodically, by making an HTTP GET request to it.

Next, it sets up an HTTP handler function that selects the least loaded server for each incoming request, forwards the request to that server, and updates the number of active connections. If no backend is healthy, the handler responds with 503 Service Unavailable.

Finally, it starts an HTTP server at the configured listening port:

    func main() {
        config, err := loadConfig("config.json")
        if err != nil {
            log.Fatalf("Error loading configuration: %s", err.Error())
        }

        healthCheckInterval, err := time.ParseDuration(config.HealthCheckInterval)
        if err != nil {
            log.Fatalf("Invalid health check interval: %s", err.Error())
        }

        var servers []*Server
        for _, serverUrl := range config.Servers {
            u, err := url.Parse(serverUrl)
            if err != nil {
                log.Fatalf("Invalid server URL %q: %s", serverUrl, err.Error())
            }
            servers = append(servers, &Server{URL: u})
        }

        // A timeout keeps a hung backend from blocking its health checker.
        healthClient := &http.Client{Timeout: healthCheckInterval}

        for _, server := range servers {
            go func(s *Server) {
                check := func() {
                    res, err := healthClient.Get(s.URL.String())
                    healthy := err == nil && res.StatusCode < 500
                    if err == nil {
                        res.Body.Close() // release the underlying connection
                    }
                    // Healthy is read under the mutex in nextServerLeastActive,
                    // so it has to be written under it as well.
                    s.Mutex.Lock()
                    s.Healthy = healthy
                    s.Mutex.Unlock()
                }
                check() // first check at startup instead of after one interval
                for range time.Tick(healthCheckInterval) {
                    check()
                }
            }(server)
        }

        http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
            server := nextServerLeastActive(servers)
            if server == nil {
                http.Error(w, "no healthy backend available", http.StatusServiceUnavailable)
                return
            }

            server.Mutex.Lock()
            server.ActiveConnections++
            server.Mutex.Unlock()

            // Deferred so the count is restored even if the proxy aborts the request.
            defer func() {
                server.Mutex.Lock()
                server.ActiveConnections--
                server.Mutex.Unlock()
            }()

            server.Proxy().ServeHTTP(w, r)
        })

        log.Println("Starting server on port", config.ListenPort)
        err = http.ListenAndServe(config.ListenPort, nil)
        if err != nil {
            log.Fatalf("Error starting server: %s\n", err)
        }
    }

And voilà! You have just built a health-aware load balancer in Go that uses the Least Connections algorithm to balance incoming connections.
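To see the forwarding step in isolation, the following sketch puts httputil.NewSingleHostReverseProxy (the same call our Proxy method uses) in front of a throwaway backend and fetches a page through it. The helper name fetchViaProxy and the response text are made up for this example, and it relies on the standard library's httptest package rather than on the load balancer code above.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"net/http/httputil"
	"net/url"
)

// fetchViaProxy starts a temporary backend that replies with body, places a
// reverse proxy in front of it, and returns what a client of the proxy sees.
func fetchViaProxy(body string) (int, string, error) {
	backend := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, body)
	}))
	defer backend.Close()

	target, err := url.Parse(backend.URL)
	if err != nil {
		return 0, "", err
	}

	// The proxy itself is an http.Handler, so it can be served directly.
	frontend := httptest.NewServer(httputil.NewSingleHostReverseProxy(target))
	defer frontend.Close()

	res, err := http.Get(frontend.URL)
	if err != nil {
		return 0, "", err
	}
	defer res.Body.Close()

	data, err := io.ReadAll(res.Body)
	return res.StatusCode, string(data), err
}

func main() {
	status, body, err := fetchViaProxy("hello from backend")
	if err != nil {
		fmt.Println("request failed:", err)
		return
	}
	fmt.Println(status, body)
}
```

The same pattern, with several test backends, is one way to exercise a load balancer's selection logic without opening fixed ports.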

Conclusion

Load balancing is an essential tool in our toolbox for building scalable and reliable distributed systems. With various load balancing algorithms at our disposal, such as Round Robin, Least Connections, IP Hash, and others, we can design a system that effectively spreads the load across multiple servers and ensures a smooth and uninterrupted service, even under heavy traffic.

In the hands-on portion of this post, we implemented a simple Least Connections load balancer in Go. We hope this practical exercise shed some light on the mechanics of load balancing and will be useful if you decide to implement your own.

And as always, subscribe to our newsletter and stay tuned to our blog for similar deep dives.