Profiling ArgoCD with Pprof

One of the things I love about engineering is the endless puzzles it throws our way. I can easily be carried away by a single unexpected performance degradation of a service, because it's so fun!

Recently we experienced one of these cases with ArgoCD, a continuous delivery tool for Kubernetes clusters.

Problem

As a part of the ArgoCD adoption we deployed it to the huge Kubernetes cluster, with ~5,000 physical nodes and hundreds of thousands of resources. Upon using it we noticed that ArgoCD status updates and reconciliation performance were noticeably slower than on smaller clusters.

It was visible on the metrics as well, internal workqueue was always high:

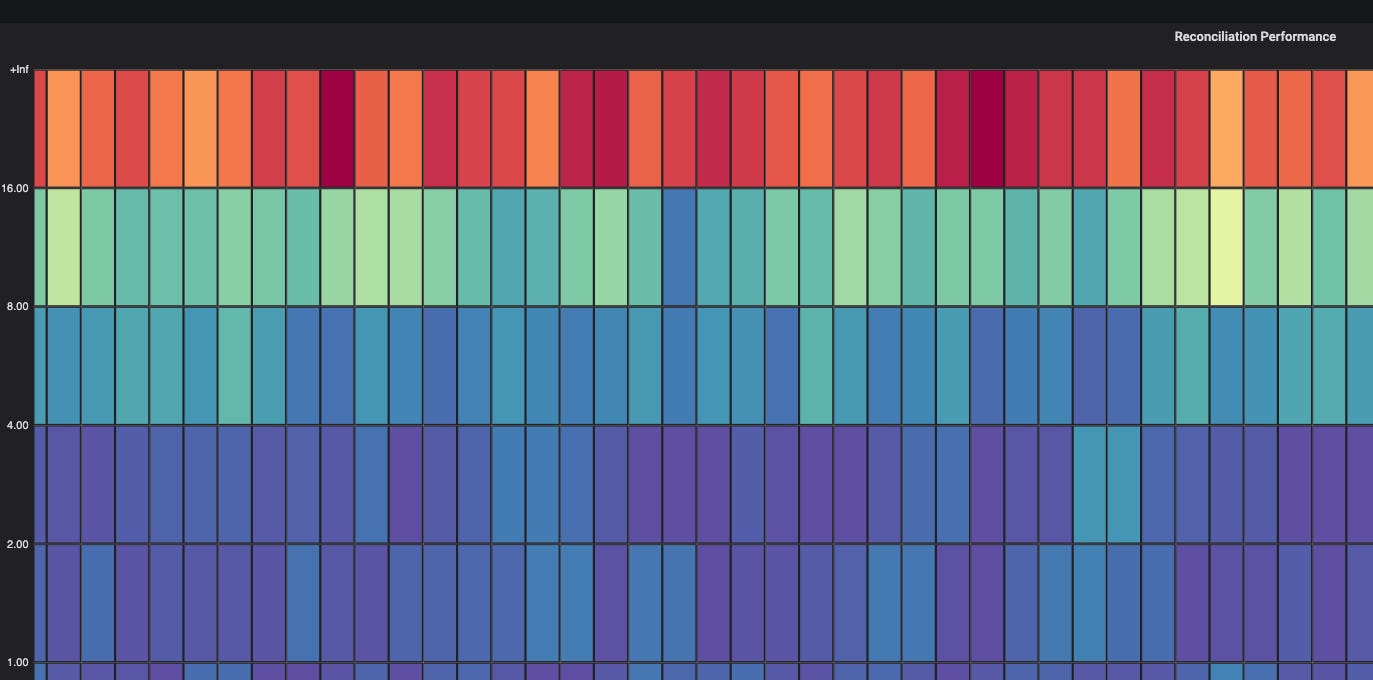

The reconciliation performance heatmap showed consistently high reconciliation times for all of our applications:

Upon closer look we figured out that the actual resource sync goes pretty fast, but the status updates in the UI and in the CRD was taking minutes to complete. Based on the code it's done by using so-called "status processors", like so

for i := 0; i < statusProcessors; i++ {

go wait.Until(func() {

for ctrl.processAppRefreshQueueItem() {

}

}, time.Second, ctx.Done())

}This would be an important detail later.

Fix Attempt #1

As a first attempt we relied on community knowledge to configure ArgoCD for larger scale. Some great material you can find here and here. Things we tried:

Bumped

controller.status.processorsandcontroller.operation.processorsto recommended higher valuesBumped controller/repo-server/server resource requests/limits to the point where it no longer was throttled or OOMed

Bumped clientQPS and burstQPS

Bumped repo-server/server replicas

We use one argocd instance per cluster, so we left sharding capabilities untouched, because it would have no impact on our setup. Unfortunately nothing from the list above helped and arguably made things slightly worse 🤔

Let's look closer

If you get stuck with anything performance related in golang, pprof is your best friend. We discussed it in details in our Debug Golang Memory Leaks newsletter issue some time ago - how to run it, how to read flamegraphs, available profiling data, etc. Check it out if you need a refresher.

ArgoCD has pprof endpoint enabled by default, which is convenient (but make sure to not expose it to untrusted networks, as your infosec team might knock on your door)

First I wanted to look at why it uses ~4 CPU cores and ~8 Gbs of memory to operate. Depending on how your argocd is setup, you should be able to do something like this to get access to the CPU profiling information.

$ kubectl port-forward pod/argocd-application-controller-0 8082 -n argocd

$ go tool pprof -http=localhost:8080 localhost:8082/debug/pprof/profile?seconds=5It will open the web UI and by navigating to the flamegraphs I found this

This flamegraph basically shows that most of the CPU cycles spent in processAppRefreshQueueItem, which is exactly the functionality we see lagging. Looking further down the stack we can see that CPU is wasted on iterating through the hierarchy of resources that are located in ArgoCD cache implementation.

Fix Attempt #2

Looking at the code we can see that controller builds a cache of all the resources that are in the cluster and maintains the "internal view" of a cluster in order to be able to accurately compare the state of resources in the cluster with the desired state in Git. That comes with the caveat - even though you might have a handful of applications and couple hundreds resources in them, ArgoCD will cache all resources that exist in your cluster, even if it won't ever use them. To confirm this we can look into the "Resource Objects Count" in cache:

As you can see it has ~370k resources and ArgoCD spends a lot of time processing through these, so it can explain the slow processing, high CPU and high memory.

So what if we reduce the amount of resources ArgoCD keeps in cache by removing things we don't need? Then the status update performance and resource utilization should improve.

You can configure resource exclusion like so:

resource.exclusions: |

- apiGroups:

- autoscaling.k8s.io

kinds:

- '*'

clusters:

- '*'

- apiGroups:

- discovery.k8s.io

kinds:

- EndpointSlice

clusters:

- '*'

...After doing the above and excluding resources we don't care about, here are the results:

The amount of resources in cache reduced 3x to ~100k

Memory usage reduced 5x

Avg CPU usage reduced by 2x

Reconciliation speed improved, but only slightly, maybe by 10% 😢

We were back to square one

Let's dig more

Another useful feature pprof has is ability to take goroutine dumps. You can do so by querying the pprof endpoint like so:

# full goroutine dump

$ curl 'localhost:8082/debug/pprof/goroutine?debug=2'

# "grouped" view

$ curl 'localhost:8082/debug/pprof/goroutine?debug=2'I was looking for processAppRefreshQueueItem to understand where it gets stuck.

The goroutine dump would look something like so for this call:

# 0x46dba4 sync.runtime_SemacquireRWMutexR+0x24 /usr/local/go/src/runtime/sema.go:82

# 0x1eec619 sync.(*RWMutex).RLock+0x99 /usr/local/go/src/sync/rwmutex.go:71

# 0x1eec5f4 github.com/argoproj/gitops-engine/pkg/cache.(*clusterCache).IterateHierarchy+0x74 /go/pkg/mod/github.com/argoproj/gitops-engine@v0.7.1-0.20240122213038-792124280fcc/pkg/cache/cluster.go:972

# 0x2259aa4 github.com/argoproj/argo-cd/v2/controller/cache.(*liveStateCache).IterateHierarchy+0xc4 /go/src/github.com/argoproj/argo-cd/controller/cache/cache.go:604

# 0x22c43c2 github.com/argoproj/argo-cd/v2/controller.(*ApplicationController).getResourceTree+0x782 /go/src/github.com/argoproj/argo-cd/controller/appcontroller.go:535

# 0x22c3675 github.com/argoproj/argo-cd/v2/controller.(*ApplicationController).setAppManagedResources+0x95 /go/src/github.com/argoproj/argo-cd/controller/appcontroller.go:455

# 0x22d04b6 github.com/argoproj/argo-cd/v2/controller.(*ApplicationController).processAppRefreshQueueItem+0x14b6 /go/src/github.com/argoproj/argo-cd/controller/appcontroller.go:1596

# 0x22c83c4 github.com/argoproj/argo-cd/v2/controller.(*ApplicationController).Run.func3+0x24 /go/src/github.com/argoproj/argo-cd/controller/appcontroller.go:819

# 0x12fda52 k8s.io/apimachinery/pkg/util/wait.BackoffUntil.func1+0x32 /go/pkg/mod/k8s.io/apimachinery@v0.26.11/pkg/util/wait/wait.go:157

# 0x12fd90e k8s.io/apimachinery/pkg/util/wait.BackoffUntil+0xae /go/pkg/mod/k8s.io/apimachinery@v0.26.11/pkg/util/wait/wait.go:158

# 0x12fd7fe k8s.io/apimachinery/pkg/util/wait.JitterUntil+0x7e /go/pkg/mod/k8s.io/apimachinery@v0.26.11/pkg/util/wait/wait.go:135

# 0x12fd73d k8s.io/apimachinery/pkg/util/wait.Until+0x1d /go/pkg/mod/k8s.io/apimachinery@v0.26.11/pkg/util/wait/wait.go:92As you can see this goroutine is stuck waiting on sync.(*RWMutex).RLock at the top of the stack.

By taking look at grouped view the problem I had 💡 moment: 49/50 status processors were waiting on the lock, so it was a lock contention problem:

24 goroutines blocked on

github.com/argoproj/gitops-engine/pkg/cache.(*clusterCache).IterateHierarchy14 goroutines blocked on

github.com/argoproj/gitops-engine/pkg/cache.(*clusterCache).GetAPIResources6 goroutines blocked on

github.com/argoproj/gitops-engine/pkg/cache.(*clusterCache).GetManagedLiveObjs5 goroutines blocked on

github.com/argoproj/gitops-engine/pkg/cache.(*clusterCache).FindResources1 active on

github.com/argoproj/gitops-engine/pkg/cache.(*Resource).iterateChildren

Unfortunately in golang there is no easy way to understand who is holding the lock, but going through the code it's likely related to the watch, which updates the cache on k8s resources updates. In large clusters, where resource updates occur frequently, this lock contention could explain the performance issues.

Fix Attempt #3

We were running ArgoCD 2.5 and I found two issues related to locks (1 and 2). The first one was to likely improve the lock contention cause it removes unnecessary lock from GetAPIResources call, which we saw occupying significant amount of goroutines.

So we upgraded to ArgoCD 2.10 and confirmed that GetAPIResources no longer waits for a lock 🚀

The reconcile speed improved... somewhat 😔. The workqueue visibly reduced on the graphs during low pod churn periods, but still took minutes when a lot of pods being scheduled.

Taking a fresh goroutine dump:

36 goroutines blocked on

github.com/argoproj/gitops-engine/pkg/cache.(*clusterCache).IterateHierarchy10 goroutines blocked on

github.com/argoproj/gitops-engine/pkg/cache.(*clusterCache).GetManagedLiveObjs3 goroutines blocked on

github.com/argoproj/gitops-engine/pkg/cache.(*clusterCache).FindResources1 active on

github.com/argoproj/gitops-engine/pkg/cache.(*Resource).iterateChildren

So the lock contention was just skewed towards IterateHierarchy call. Ugh!

Next Steps

So after trying all of the above solutions we are down to two options at this point, which were a last resort but will address the performance issues we were seeing

Significantly bump reconciliation timeout from 45s to 5 minutes or more. This will reduce the workqueue size, since applications would be time queued less often

Filter out resource updates not related to the "change in desired state of application". By default, an ArgoCD application is refreshed every time a resource that belongs to it changes. So if we filter out not-so-important, but frequently updated fields like

/statuswe can also reduce the workqueue size and improve speed.

In conclusion, troubleshooting performance issues in complex systems can be challenging, but with the right tools and approach, it can also be a rewarding learning experience. Hope you enjoyed this deep dive! 🕵️