Why Distributed Systems Fail (Part 1)

Distributed systems are tricky: it's easy to make wrong assumptions that lead to problems down the road. Back in the 1990s, computer scientist L. Peter Deutsch and others at Sun Microsystems identified eight common misconceptions, or "fallacies," that trip up engineers working on distributed systems. Decades later, these fallacies are still relevant:

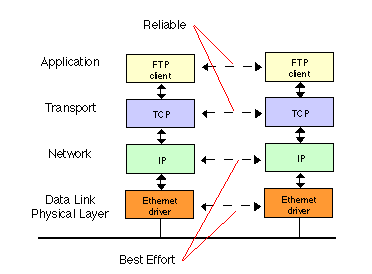

The Network is Reliable: It's risky to assume networks are 100% reliable. Networks can and do fail in various ways.

Latency is Zero: While we might wish our networks had no latency, that's simply not physically possible - even light takes time to travel distances. Ignoring the inevitable delay in data transmission can lead to unrealistic expectations of system performance.

Bandwidth is Infinite: This overlooks the physical and practical limitations on data transfer rates.

The Network is Secure: It's no wonder security is a growing industry. Assuming inherent security can lead to vulnerabilities and gaps in protective measures.

Topology Doesn't Change: This neglects the dynamic nature of network configurations.

There is One Administrator: This simplification fails to consider the complexity of managing distributed systems, where different parts are often run by different teams or organizations.

Transport Cost is Zero: This overlooks the resources, in both compute and money, required to move data.

The Network is Homogeneous: This ignores the diversity of hardware, protocols, and standards across networks.

These fallacies, if not recognized and addressed, can lead to design flaws, performance issues, and security vulnerabilities in distributed systems. In the following sections, we will break down each of these misconceptions, exploring their implications and how to mitigate the risks they pose in real-world applications.

Fallacy 1: The Network is Reliable

The belief that 'The Network is Reliable' is one of the most common and risky assumptions in the field of distributed computing. This fallacy leads to an underestimation of the likelihood and impact of network failures. In reality, networks are susceptible to a range of issues, from temporary outages and packet loss to more severe disruptions caused by hardware failures, software bugs, or external factors like natural disasters.

Implications:

System Downtime: Relying on a flawless network can lead to significant system downtime when inevitable failures occur.

Data Loss or Corruption: Without robust handling of network issues, data may be lost or corrupted during transmission.

Security Vulnerabilities: Assuming network reliability might lead to neglecting necessary security protocols, potentially exposing the system to attacks.

Poor User Experience: Applications that don't account for network unreliability can frustrate users with inconsistent performance.

Mitigation Strategies:

Redundancy: Implement redundant network paths and failover mechanisms to maintain system functionality during network failures.

Retries and Timeouts: Incorporate intelligent retry mechanisms with exponential backoff and sensible timeout settings to handle transient network issues.

Acknowledgment Mechanisms: Use acknowledgment messages to confirm the successful reception of data.

Monitoring and Alerting: Establish comprehensive monitoring and alert systems to detect and respond to network issues promptly.

Load Balancing: Utilize load balancers to distribute traffic evenly across the network, preventing overload on any single point.

Caching: Employ caching strategies to provide users with immediate access to data during short network outages.

Data Validation and Correction: Implement checksums and error correction protocols to ensure data integrity.

Graceful Degradation: Design systems to degrade functionality gracefully in the face of network issues, maintaining a level of service where possible.
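As a minimal sketch of the "Retries and Timeouts" strategy above, the snippet below retries a failing call with exponential backoff and random jitter. The names `TransientNetworkError` and `call_with_retries` are hypothetical and exist only for this illustration; a real client would catch whatever timeout or connection errors its own library raises.

```python
import random
import time


class TransientNetworkError(Exception):
    """Hypothetical error standing in for a timeout or dropped connection."""


def call_with_retries(operation, max_attempts=5, base_delay=0.1, max_delay=5.0,
                      sleep=time.sleep):
    """Call operation(), retrying transient failures with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientNetworkError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to the caller
            # The delay cap doubles each attempt: base, 2*base, 4*base, ...
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Jitter: sleep a random time up to the cap so that many clients
            # do not all retry at the same instant.
            sleep(random.uniform(0, cap))


if __name__ == "__main__":
    attempts = {"count": 0}

    def flaky_request():
        # Simulated remote call that fails twice, then succeeds.
        attempts["count"] += 1
        if attempts["count"] < 3:
            raise TransientNetworkError("simulated timeout")
        return "ok"

    print(call_with_retries(flaky_request, base_delay=0.01))
```

Retrying is only safe when the operation is idempotent, meaning that running it twice has the same effect as running it once. Otherwise a retry after a lost response could apply the same change twice.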

By acknowledging and preparing for the inherent unreliability of networks, distributed systems can be made more robust, resilient, and user-friendly.
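To make the checksum and acknowledgment ideas more concrete, here is a small sketch in which the sender prefixes each message with a CRC-32 checksum and the receiver replies with a positive or negative acknowledgment. The framing format and the `frame` and `receive` helpers are assumptions made up for this example, not a standard protocol.

```python
import zlib


def frame(payload: bytes) -> bytes:
    """Prefix the payload with its CRC-32 checksum as 4 big-endian bytes."""
    return zlib.crc32(payload).to_bytes(4, "big") + payload


def receive(framed: bytes):
    """Return (ack, payload); ack is False when the checksum does not match."""
    expected = int.from_bytes(framed[:4], "big")
    payload = framed[4:]
    if zlib.crc32(payload) != expected:
        return False, None  # negative acknowledgment: the sender should resend
    return True, payload


if __name__ == "__main__":
    message = frame(b"hello")
    print(receive(message))
    # Flip one bit in the last byte to simulate corruption in transit.
    corrupted = message[:-1] + bytes([message[-1] ^ 0x01])
    print(receive(corrupted))
```

Note that a CRC only detects accidental corruption. It does not correct errors, and it offers no protection against deliberate tampering.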

Fallacy 2: Latency is Zero

The assumption that 'Latency is Zero' is a common oversight in distributed systems. This fallacy ignores the time taken for data to travel across the network. In reality, latency is affected by a multitude of factors, including physical distance, network bandwidth, router hops, and the quality of connections. Even in high-speed networks, latency can never be completely eliminated.
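A quick back-of-the-envelope calculation shows why distance alone sets a floor on latency. The sketch below assumes that light in optical fiber travels at roughly two thirds of its speed in a vacuum and uses an approximate distance of 5,600 km, about the distance between New York and London. Both figures are rough assumptions for illustration.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in a vacuum
FIBER_FACTOR = 2 / 3               # assumed: light in fiber is roughly 2/3 as fast


def min_round_trip_ms(distance_km: float, factor: float = FIBER_FACTOR) -> float:
    """Lower bound on round-trip time over a straight path of this length."""
    one_way_s = distance_km / (SPEED_OF_LIGHT_KM_S * factor)
    return 2 * one_way_s * 1000


if __name__ == "__main__":
    # Assumed distance, roughly New York to London.
    print(f"{min_round_trip_ms(5_600):.1f} ms")
```

Under these assumptions the round trip cannot be faster than roughly 56 ms, before any routing, queuing, or processing delay is added. Real cable routes are also longer than the straight-line distance, so observed latency will be higher.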

Implications:

Performance Issues: Applications designed without considering latency can suffer from poor performance, especially in scenarios requiring real-time data processing.

User Experience Degradation: Interactive applications, such as online gaming or video conferencing, can become frustratingly slow, impacting user satisfaction.

Inaccurate System Synchronization: Time-sensitive operations may fail or produce erroneous results due to unexpected delays.

Inefficient Resource Utilization: Overlooking latency can lead to suboptimal resource allocation, as systems might wait unnecessarily for responses.

Mitigation Strategies:

Geographic Distribution: Place servers and data centers closer to the end-users to minimize physical distance and, consequently, network latency.

Optimization of Protocols: Use efficient communication protocols that minimize overhead and are optimized for the specific use case.

Asynchronous Communication: Implement asynchronous operations to prevent systems from stalling while waiting for responses.

Caching Strategies: Employ caching closer to the user to reduce the need for frequent long-distance data retrieval.

Load Balancing: Utilize smart load balancing that considers geographic location and current network latency.

Predictive Fetching: Anticipate user needs and prefetch data to minimize perceived latency.

Performance Testing: Regularly test the system under realistic network conditions to understand and optimize for latency impacts.

User Interface Design: Design UIs to give immediate feedback to the user, masking underlying network latency.

Acknowledging and accounting for network latency is crucial in designing responsive, efficient, and user-friendly distributed systems. By implementing these strategies, engineers can significantly mitigate the impact of latency on system performance and user experience.
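The asynchronous communication strategy can be illustrated with Python's `asyncio`. In this sketch, `fetch` is a hypothetical stand-in for a remote call, and `asyncio.sleep` simulates network latency. Because the three calls wait concurrently, the total time is close to a single delay rather than the sum of all three.

```python
import asyncio
import time


async def fetch(name: str, delay_s: float) -> str:
    """Stand-in for a remote call; asyncio.sleep simulates network latency."""
    await asyncio.sleep(delay_s)
    return f"{name}: done"


async def fetch_all(delay_s: float = 0.2):
    # gather() runs the three simulated calls concurrently and returns
    # their results in the same order as the arguments.
    return await asyncio.gather(
        fetch("users", delay_s),
        fetch("orders", delay_s),
        fetch("inventory", delay_s),
    )


if __name__ == "__main__":
    start = time.perf_counter()
    results = asyncio.run(fetch_all())
    print(results, f"{time.perf_counter() - start:.2f}s")
```

This does not make any single call faster. It only keeps the program from sitting idle while it waits, which helps most when the calls are independent of each other.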

Fallacy 3: Bandwidth is Infinite

The assumption that 'Bandwidth is Infinite' overlooks the limited data transmission capacity of networks. This misconception can result in systems that expect high data throughput regardless of actual network capabilities. In reality, bandwidth is a limited resource, influenced by network infrastructure, traffic congestion, and the capabilities of end-user devices.

Implications:

Bottlenecks and Slowdowns: Systems designed without bandwidth constraints in mind can suffer from bottlenecks, leading to significant slowdowns.

Inefficient Data Handling: Overestimating bandwidth can result in sending large amounts of data unnecessarily, wasting network resources and impacting other operations.

Poor Scalability: Systems that don't account for bandwidth limitations may face challenges in scaling up to accommodate more users or data.

User Experience Issues: Users on networks with limited bandwidth might experience delays, interruptions, or even service unavailability.

Mitigation Strategies:

Data Optimization: Compress data to reduce size and optimize formats for efficient transmission.

Adaptive Techniques: Implement adaptive streaming and data transfer techniques that adjust to available bandwidth.

Load Balancing: Use load balancing to distribute network traffic efficiently across available paths.

Bandwidth Throttling: Intelligently throttle bandwidth usage to avoid overwhelming network capacity.

Prioritization of Traffic: Prioritize critical data traffic to ensure essential services remain unaffected during high load.

Monitoring and Analytics: Continuously monitor network performance and use analytics to understand and manage bandwidth usage.

Scalable Architecture: Design a system architecture that can gracefully adapt to varying bandwidth conditions.
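As a small illustration of the data optimization point, the sketch below compresses a JSON payload with `zlib` from the Python standard library before it would be sent. The payload is made up for this example, and how much you save depends heavily on the data: repetitive text compresses well, while already-compressed formats such as JPEG barely shrink.

```python
import json
import zlib

# Hypothetical payload: 1,000 similar records, as a list endpoint might return.
records = [{"id": i, "status": "active", "region": "eu-west"} for i in range(1000)]
raw = json.dumps(records).encode("utf-8")
compressed = zlib.compress(raw, level=6)

print(f"raw: {len(raw)} bytes, compressed: {len(compressed)} bytes")

# The receiver restores the original bytes exactly.
assert zlib.decompress(compressed) == raw
```

Compression trades CPU time for bandwidth, so it pays off most on slow or expensive links.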

By considering the finite nature of bandwidth and implementing these strategies, distributed systems can be designed to be more efficient, reliable, and user-friendly, even under varying network conditions.
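One common way to implement bandwidth throttling is a token bucket, sketched below. The `TokenBucket` class and its parameters are hypothetical names chosen for this illustration. Tokens refill at a steady rate, each byte sent spends one token, and the bucket capacity limits how large a burst can be.

```python
import time


class TokenBucket:
    """Allow rate_bytes_per_s on average, with bursts of up to capacity bytes."""

    def __init__(self, rate_bytes_per_s: float, capacity: float,
                 clock=time.monotonic):
        self.rate = rate_bytes_per_s
        self.capacity = capacity
        self.tokens = capacity  # start with a full bucket
        self.clock = clock
        self.last = clock()

    def try_consume(self, n_bytes: int) -> bool:
        """Return True and spend tokens if n_bytes may be sent right now."""
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity,
                          self.tokens + (now - self.last) * self.rate)
        self.last = now
        if n_bytes <= self.tokens:
            self.tokens -= n_bytes
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(rate_bytes_per_s=1_000, capacity=2_000)
    print(bucket.try_consume(1_500))  # fits within the initial burst
    print(bucket.try_consume(1_500))  # rejected until enough tokens refill
```

A caller that gets `False` can wait and try again, queue the data, or drop low-priority traffic, depending on what the application needs.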

Fallacy 4: The Network is Secure

Assuming that 'The Network is Secure' leads to complacency about security measures and overlooks the many threats present in network environments. Network security is not inherent but requires deliberate and continuous effort. Threats can arise from various sources, including external attacks, internal vulnerabilities, and even inadvertent user actions.

Implications:

Vulnerability to Attacks: Unsecured networks are prone to various types of cyberattacks, such as eavesdropping, man-in-the-middle attacks, phishing, and DDoS attacks.

Data Breaches: Insufficient network security can lead to unauthorized access and theft of sensitive data.

Compliance Issues: Neglecting network security can result in non-compliance with regulatory standards, leading to legal and financial repercussions.

Loss of Trust: Security incidents can damage the reputation of an organization and erode user trust.

Mitigation Strategies:

Encryption: Use strong encryption for data in transit and at rest to protect against eavesdropping and data breaches.

Authentication and Authorization: Implement robust authentication mechanisms and ensure strict authorization controls to regulate access.

Regular Security Audits: Conduct regular security audits and vulnerability assessments to identify and rectify potential weaknesses.

Firewalls and Intrusion Detection Systems: Deploy firewalls and intrusion detection systems to monitor and protect network traffic.

Security Training: Educate employees and users about security best practices and the importance of safeguarding network resources.

Up-to-date Software: Keep all network software, including operating systems and applications, updated to patch known vulnerabilities.

Network Segmentation: Segment the network to contain breaches and limit access to sensitive areas.

Incident Response Plan: Develop and maintain an incident response plan to quickly and effectively address security breaches.

Acknowledging the inherent insecurity of networks and implementing these strategies is essential for maintaining the confidentiality, integrity, and availability of distributed systems and their data.
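As one small example of not trusting the network, a receiver can check that a message really came from a party holding a shared secret and was not altered in transit. The sketch below uses HMAC-SHA256 from the Python standard library. The `sign` and `verify` helpers are hypothetical names, and the key is a placeholder; a real key would come from a secrets manager rather than source code.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag for the message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Check the tag using a constant-time comparison."""
    return hmac.compare_digest(sign(key, message), tag)


if __name__ == "__main__":
    key = b"placeholder-shared-secret"  # assumption: both sides hold this key
    message = b'{"action": "transfer", "amount": 100}'
    tag = sign(key, message)
    print(verify(key, message, tag))
    print(verify(key, b'{"action": "transfer", "amount": 9999}', tag))
```

An HMAC provides authenticity and integrity but not confidentiality, since the message itself is still readable. Encryption in transit would typically come from TLS on top of this.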

In this blog post, we've explored the first four of the eight classic fallacies of distributed computing.

Subscribe so you don't miss the next part, where we'll cover the remaining four fallacies and provide more insights into building resilient distributed architectures.