Retries, Backoff and Jitter

💡 In a previous post we introduced the Eight Pillars of Fault-tolerant Systems; today we will discuss "Retries".

In distributed systems, failures and latency issues are inevitable. Services can fail due to overloaded servers, network issues, bugs, and various other factors. As engineers building distributed systems, we need strategies that keep our services robust and resilient in the face of such failures. One useful technique is retrying failed operations.

Understanding Retries

At a basic level, a retry simply means attempting an operation again after it fails. This helps mask transient failures from the end user. Together with the fail-fast pattern, retries are essential in distributed systems, where partial failures and temporary blips happen frequently. Without retries, these minor glitches surface directly to users, degrading their experience and reducing availability.

Scenarios Where Retries Are Beneficial

Transient Failures - These are short-lived blips in availability, performance, or consistency. Common causes include network congestion, load spikes, database connection issues, and brief resource bottlenecks. A retry allows the request to succeed once the disruption has cleared.

Partial Failures - In large distributed systems, it's common for a percentage of requests to fail at any given time due to nodes going down, network partitions, software bugs, and various edge cases. Retries help smooth over these intermittent inconsistencies and exceptions. The retry logic hides the partial failure from the end user so the system keeps running reliably.

In both cases, requests are given multiple chances to succeed before the failure is surfaced to the user or client. From the caller's point of view, retries make an unreliable system behave far more consistently.

Challenges with Retries

While retries are very useful, they also come with some risks and challenges that must be mitigated:

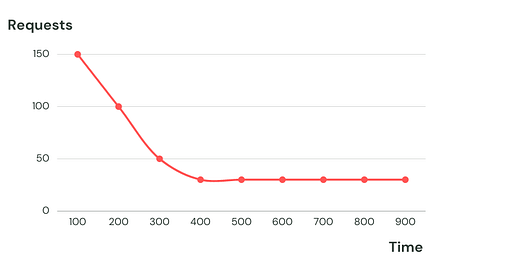

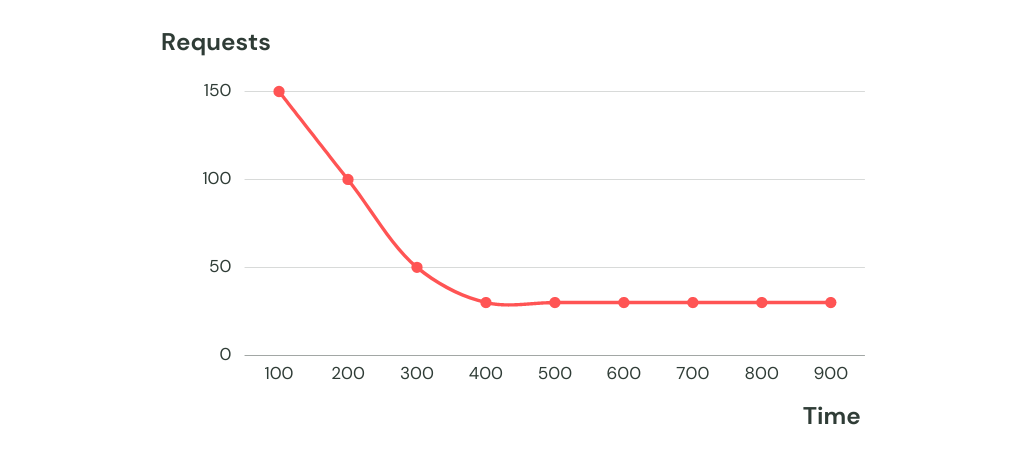

Load Amplification - if a system is already struggling with high load or is completely down, retries can amplify problems by slamming the system with additional requests. This overburdening can lengthen the outage or trigger cascading failures.

Solution: implement exponential backoff between retries to progressively increase wait time. Limit the total number of retry attempts. Use a circuit breaker to stop retries when error thresholds are exceeded. This prevents overloading the already struggling system.

Side Effects - some operations, like creating a resource or transferring money, have real-world "side effects". Retrying them can unintentionally repeat those effects, producing duplicate charges or duplicate records.

Solution: design interfaces and systems to be idempotent whenever possible, since idempotent operations can be safely retried. For non-idempotent operations, make each request uniquely identifiable (for example, with a client-generated request ID) so that duplicates can be filtered out; if that isn't possible, don't retry them and fail right away.
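Making requests uniquely identifiable is often done with an idempotency key that the client generates once and resends on every retry. A minimal server-side sketch, assuming an in-memory map; the `PaymentStore` type, the key format and the result strings are hypothetical, and a real service would persist keys durably and expire them eventually.

```go
package main

import (
	"fmt"
	"sync"
)

// PaymentStore deduplicates requests by a client-supplied idempotency key.
type PaymentStore struct {
	mu      sync.Mutex
	results map[string]string // idempotency key -> result of the first execution
	charges int               // number of charges actually performed
}

func NewPaymentStore() *PaymentStore {
	return &PaymentStore{results: make(map[string]string)}
}

// Charge performs the side effect only the first time a key is seen;
// retries carrying the same key get the original result back.
func (s *PaymentStore) Charge(key string, amountCents int) string {
	s.mu.Lock()
	defer s.mu.Unlock()
	if res, ok := s.results[key]; ok {
		return res // duplicate: replay the stored outcome, no new charge
	}
	s.charges++
	res := fmt.Sprintf("charged %d cents (charge #%d)", amountCents, s.charges)
	s.results[key] = res
	return res
}

func main() {
	s := NewPaymentStore()
	// The client retries the same logical request with the same key.
	first := s.Charge("order-42", 1999)
	again := s.Charge("order-42", 1999)
	fmt.Println(first == again, s.charges) // prints "true 1"
}
```

The important design choice is that the key identifies the logical operation, not the individual attempt, so every retry of one payment maps to one charge.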

Fairness & Capacity - retries aren't free: each one competes for resources alongside new incoming requests. In addition, without centralized control over retry load, retries can eat into downstream capacity unpredictably.

Solution: implement a rate limiter on your retry load upstream. This bounds the worst-case extra load that retries can put on downstream services, and it ensures that retries make up only a small, bounded fraction of the traffic competing with new requests, rather than an unbounded one.
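One way to implement such a limiter is a retry budget, a ratio-based variant of a token bucket: every new request earns a fraction of a retry, and every retry spends one. This is a swapped-in technique rather than the only option. The sketch assumes a single process with in-memory state; the `RetryBudget` type and its `percent`/`maxBurst` parameters are hypothetical.

```go
package main

import (
	"fmt"
	"sync"
)

// RetryBudget lets retries make up at most `percent` percent of first
// attempts, with at most `maxBurst` retries banked at once. Credits are kept
// in hundredths of a retry so the arithmetic stays exact.
type RetryBudget struct {
	mu        sync.Mutex
	credits   int // hundredths of a retry currently available
	percent   int // credits earned per first attempt
	maxCredit int // cap on banked credits (maxBurst * 100)
}

func NewRetryBudget(percent, maxBurst int) *RetryBudget {
	return &RetryBudget{percent: percent, maxCredit: maxBurst * 100}
}

// OnRequest records a first attempt and earns retry credit.
func (b *RetryBudget) OnRequest() {
	b.mu.Lock()
	defer b.mu.Unlock()
	b.credits += b.percent
	if b.credits > b.maxCredit {
		b.credits = b.maxCredit
	}
}

// TryRetry reports whether a retry may be sent, spending one retry's worth
// of credit if so. When it returns false the caller should fail fast.
func (b *RetryBudget) TryRetry() bool {
	b.mu.Lock()
	defer b.mu.Unlock()
	if b.credits < 100 {
		return false
	}
	b.credits -= 100
	return true
}

func main() {
	budget := NewRetryBudget(10, 20) // retries capped at ~10% of traffic
	for i := 0; i < 100; i++ {
		budget.OnRequest()
	}
	allowed := 0
	for i := 0; i < 100; i++ { // suppose every one of the 100 requests failed
		if budget.TryRetry() {
			allowed++
		}
	}
	fmt.Println(allowed) // prints "10"
}
```

Even when every request fails, retries add at most about 10% extra load, which is the bounded worst case described above.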

Synchronized Retries - if multiple clients time out at the same time and retry simultaneously, it can create a "retry storm" that overloads the system. This thundering herd problem can be worse than the original issue.

Solution: add jitter to randomize the wait times before retrying. This spreads retries out over time and avoids synchronized spikes in traffic. Limit the number of retries and use circuit breakers to prevent overloading the system.

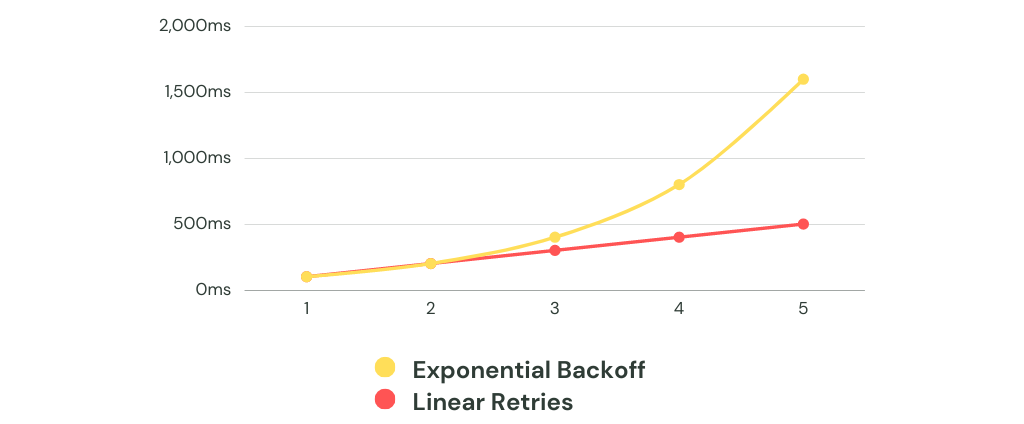

Exponential backoff

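Exponential backoff is a retry strategy where the delay between retries grows exponentially with each attempt. For example, with a base delay of 100 ms that doubles each time, successive retries wait 100 ms, 200 ms, 400 ms, 800 ms, and so on.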

The increasing waits help prevent hammering an already struggling system with constant rapid retries. The progressively longer delays give the backend services time to recover from disruptions.

Here is an example implementation in Go: