The Art of Building Fault-Tolerant Software Systems

We live in a world driven by software systems. They have weaved themselves into the fabric of our daily lives, and their continuous, reliable performance is no longer a luxury but a necessity. Businesses, now more than ever, need to ensure their systems remain available, reliable, and resilient. This necessity is fueled by the desire to satisfy customers and outpace competitors. The secret sauce to achieving this? Building fault-tolerant software systems.

Fault-tolerant systems are significant because they help avert costly downtime and lost revenue. Imagine a financial institution that heavily relies on a trading platform to execute trades. It can't afford for the platform to go offline during market hours. If it does, the company risks losing millions in revenue and damaging its reputation. However, with fault-tolerant strategies and patterns in place, the company can ensure the platform's availability even in the event of failure.

In this blog post, we'll take a closer look at some strategies and patterns big tech companies and software engineering teams utilize to stay available. Let's get started!

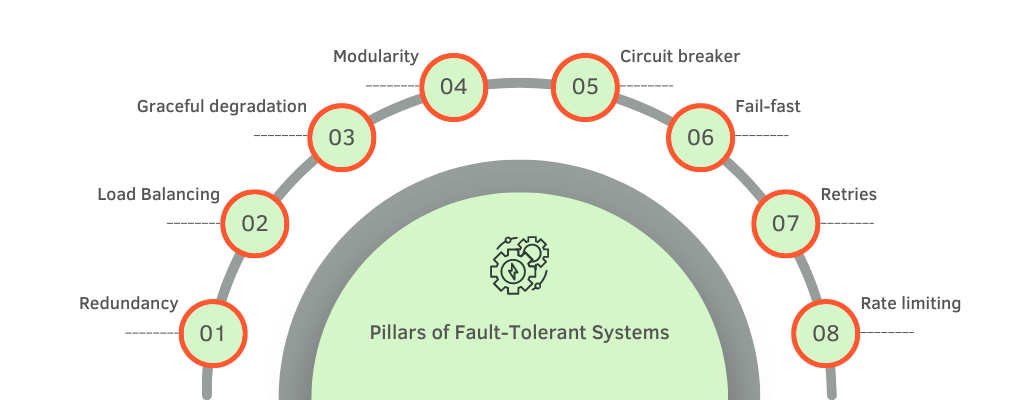

Eight Pillars of Fault-tolerant Systems

Redundancy and Replication is one of the most common strategies for building fault-tolerant software systems. Redundancy involves replicating critical components of the system and ensuring that multiple instances of these components are available. This approach ensures that if one instance of a component fails, another instance can take over. Redundancy can be achieved at different levels of the system, including hardware, software, and data. For instance, hardware redundancy involves using multiple servers or storage devices, while software redundancy involves replicating application instances across multiple servers.

Load balancing is another critical and well known strategy for building fault-tolerant software systems. Load balancing involves distributing incoming network traffic across multiple servers to ensure that no single server is overwhelmed. This approach ensures that if one server fails, traffic can be automatically redirected to another server, reducing the impact of the failure. Load balancing can be achieved using hardware or software solutions, and it is often used in conjunction with redundancy and replication to maximize the fault-tolerance of a system.

Modularity involves breaking down a system into smaller, independent components, or modules, that can be developed, deployed, and maintained independently. This approach makes it easier to identify and isolate faults, and it enables faster recovery from failures. Microservices take modularity a step further by breaking down a system into even smaller, independent services that can be developed and deployed independently. This approach further increases fault-tolerance by minimizing the impact of failures and enabling rapid recovery.

Graceful degradation is about designing a system to continue functioning—at least at a basic level—even if some components fail. This approach ensures that the system remains available and usable, even if some features or functions are temporarily unavailable. Graceful degradation can be achieved by designing the system to detect failures and automatically adjust its behavior to compensate for them. For example, a web application might display a simplified version of a page if a feature that relies on a third-party service is unavailable.

Circuit breaker is a design pattern that can be used to prevent cascading failures in a system. It involves wrapping calls to external dependencies, such as a database or web service, in a circuit breaker. The circuit breaker monitors the health of the external dependency, and if it detects a failure, it opens the circuit, preventing further calls to the dependency. This approach allows the system to gracefully degrade, rather than crashing, in the event of an external dependency failure.

Fail-fast is a pattern that involves detecting failures as early as possible and stopping the execution of the system to prevent further damage. This approach ensures that the system fails quickly and prevents cascading failures that can be more difficult to recover from. Fail-fast can be achieved by adding assertions or preconditions to code to detect errors early in the development process. Setting proper timeouts & deadlines can be used as a form of fail-fast, where the system terminates an operation that takes too long to complete, preventing further damage to the system.

Retries involve automatically retrying an operation that has failed, in the hope that it will succeed on a subsequent attempt. This approach can be effective for transient failures, such as network timeouts or temporary service unavailability. Retries can be implemented using different algorithms, such as exponential backoff, which increases the delay between each retry to reduce the load on the system.

Rate limiting is a strategy that involves limiting the rate at which a system processes incoming requests. This approach prevents overload and ensures that the system can handle bursts of traffic without becoming overwhelmed. Rate limiting can be achieved by setting limits on the number of requests that can be processed per second or per minute. This strategy is particularly useful for systems that rely on external APIs or services that have usage limits.

Final Thoughts

This is not an exhaustive list of techniques and approaches that can be used to increase system reliability and availability. However, the patterns mentioned above provide a good starting point for developers looking to improve the resilience of their software systems.

Subscribe to our newsletter and stay tuned to our blog for the upcoming deep dive into each of these strategies (with code snippets!), where we'll further demystify the art of constructing robust, resilient, and truly fault-tolerant software systems.