What Makes a Service Observable?

Observability is crucial for operating reliable, resilient systems. As experienced SREs, we are often asked what makes a service truly observable. Understanding what is happening inside a complex distributed system is critical for detecting and diagnosing issues quickly, but achieving observability is easier said than done. In this post, we share our perspective on the key elements that contribute to strong observability.

What exactly makes a service observable? What are the foundational practices and tools required to gain insight into the inner workings of modern applications?

Metrics and Logging

The foundation of observability is comprehensive instrumentation of your services to output relevant metrics and logs. Metrics tell the story of your system's overall health and performance. Logs provide insight into discrete events and trace execution flow.

Start by identifying key business and system metrics that indicate the core functionality and health of your services. These may include request rates, latency, error rates, saturation, etc. Instrument your services to emit metrics to a centralized platform like Prometheus or Datadog.

Logs should capture event details, error conditions, and workflow steps. Send logs to a system like the ELK stack, Splunk, or a similar product. Standardize log formats and classify messages by severity.

Example of metrics and logs in a Go application:

This instruments a basic handler with a Prometheus request counter and a latency histogram, while a zap-based logger records request details. It could be extended with error metrics, more request information, and so on.

    package main

    import (
        "net/http"
        "time"

        "github.com/prometheus/client_golang/prometheus"
        "github.com/prometheus/client_golang/prometheus/promhttp"
        "go.uber.org/zap"
    )

    var (
        // Counts every request, labeled by URL path.
        httpRequests = prometheus.NewCounterVec(
            prometheus.CounterOpts{
                Name: "http_requests_total",
                Help: "Number of HTTP requests",
            },
            []string{"path"},
        )

        // Records request latency in seconds using the default buckets.
        httpDuration = prometheus.NewHistogramVec(
            prometheus.HistogramOpts{
                Name: "http_request_duration_seconds",
                Help: "HTTP request latency distribution",
            },
            []string{"path"},
        )
    )

    func main() {
        logger, err := zap.NewProduction()
        if err != nil {
            panic(err)
        }
        defer logger.Sync() // flushes buffer, if any
        sugar := logger.Sugar()

        // Collectors must be registered, or they never appear on /metrics.
        prometheus.MustRegister(httpRequests, httpDuration)

        http.Handle("/metrics", promhttp.Handler())
        http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
            start := time.Now()

            // Raw paths are fine for a demo; in production, label by route
            // pattern to keep label cardinality bounded.
            httpRequests.WithLabelValues(r.URL.Path).Inc()

            sugar.Infow("Handled request",
                "method", r.Method,
                "path", r.URL.Path,
                "from", r.RemoteAddr,
            )

            time.Sleep(10 * time.Millisecond) // simulate work

            httpDuration.WithLabelValues(r.URL.Path).Observe(time.Since(start).Seconds())
            w.Write([]byte("hello world"))
        })

        sugar.Fatal(http.ListenAndServe(":8080", nil))
    }

Distributed Tracing

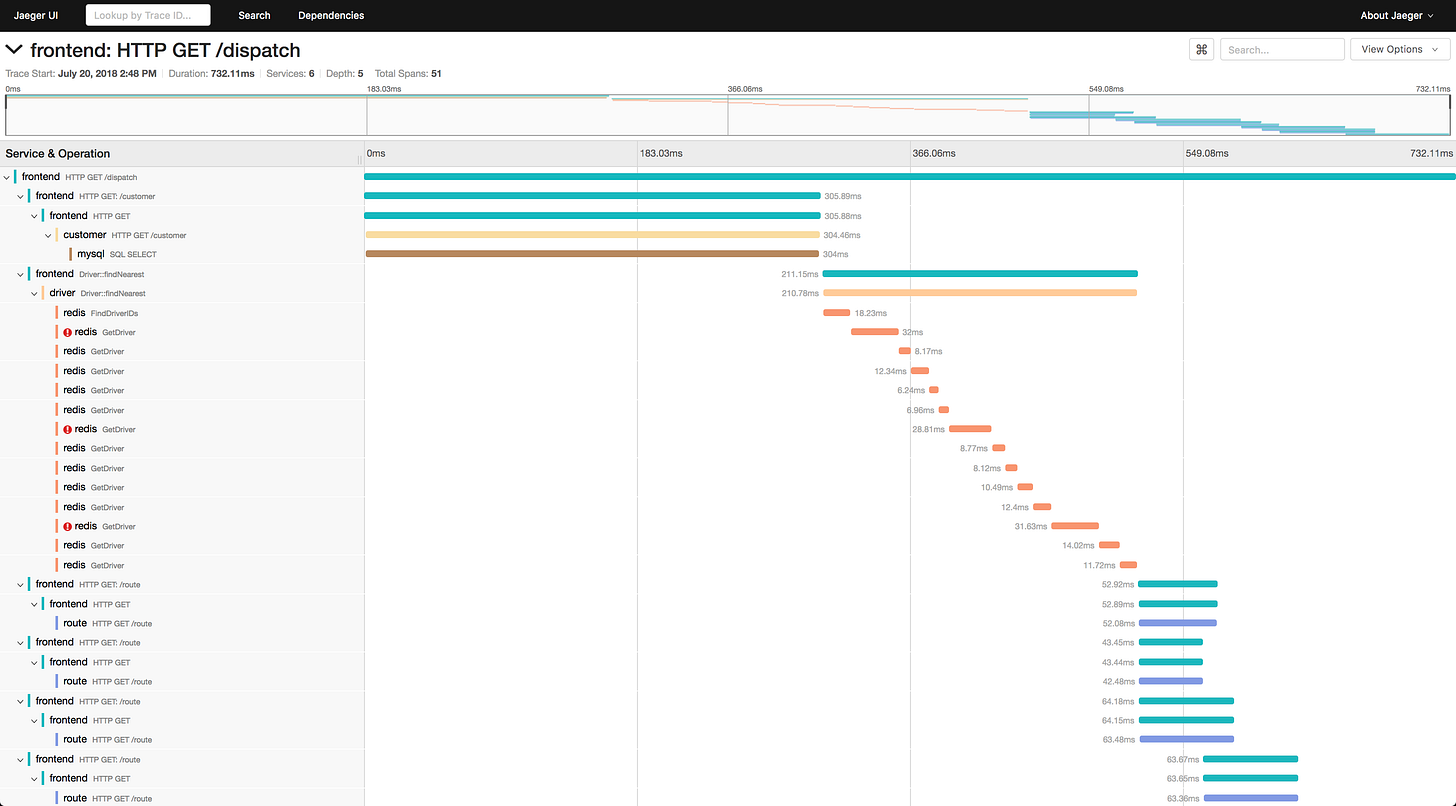

Distributed tracing ties together events across microservices so you can follow a request end to end. Implement tracing with a tool like OpenTelemetry, which assigns each request a unique trace ID that is passed between services. View trace waterfalls to visualize timing and dependencies.

Below is a Jaeger UI tracing view:

Example of distributed tracing in a Go application:

This initializes an OpenTelemetry tracer and carries a context with tracing information through the handler. The span is exported to Jaeger for distributed tracing visualization.

Additional spans could be added for calls to downstream services, and other information such as errors can be attached as span events.

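    package main

    import (
        "log"
        "net/http"

        "go.opentelemetry.io/otel"
        "go.opentelemetry.io/otel/exporters/jaeger"
        "go.opentelemetry.io/otel/propagation"
        sdktrace "go.opentelemetry.io/otel/sdk/trace"
    )

    func initTracer() {
        // Create Jaeger exporter using the default collector endpoint.
        // Note: recent OpenTelemetry Go releases deprecate this exporter
        // in favor of OTLP.
        exp, err := jaeger.New(jaeger.WithCollectorEndpoint())
        if err != nil {
            log.Fatal(err)
        }

        // The tracer provider lives in the SDK package, not the API package.
        tp := sdktrace.NewTracerProvider(sdktrace.WithSyncer(exp))
        otel.SetTracerProvider(tp)

        // Use W3C Trace Context headers to carry trace IDs between services.
        otel.SetTextMapPropagator(propagation.TraceContext{})
    }

    func main() {
        initTracer()
        http.HandleFunc("/", handle)
        log.Fatal(http.ListenAndServe(":8080", nil))
    }

    func handle(w http.ResponseWriter, r *http.Request) {
        // Continue the caller's trace if the request carries trace headers.
        ctx := otel.GetTextMapPropagator().Extract(r.Context(), propagation.HeaderCarrier(r.Header))

        tr := otel.Tracer("app-tracer")
        ctx, span := tr.Start(ctx, "handle-request")
        defer span.End()

        // handle request, passing ctx to any downstream calls...

        span.AddEvent("Request processed")
    }

Service Dashboards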

Bring your metrics, logs, and traces together into cohesive dashboards tailored to each service. Build dashboards that focus on the most critical health indicators and business concerns.

For example, a dashboard for an authentication service may include:

Request rate, latency, and error metrics

Login success and failure counts

Cache hit rate

CPU and memory utilization

Authentication errors in logs

Traces for sample login transactions

Make sure dashboards are user-friendly so any on-call engineer can quickly assess service state. Optimize for high information density by summarizing key metrics and highlighting anomalies. Provide drill-down capabilities to view details on demand.

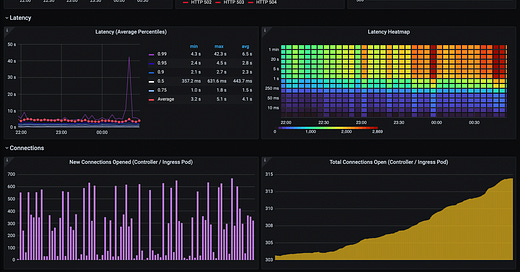

Use a dashboarding tool such as Grafana, which provides visualization capabilities for time-series data. Set up templates for common charts and panels to keep service dashboards consistent. Automate dashboard creation using configuration as code.
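As a rough sketch of dashboards as code, the program below generates one dashboard definition per service from a shared template. The struct fields cover only a small, assumed subset of Grafana's dashboard JSON model, and the `job` label and service names are hypothetical; check the field names against your Grafana version before importing the output.

```go
package main

import (
	"encoding/json"
	"fmt"
	"os"
)

// These types model only a small, assumed subset of Grafana's dashboard JSON.
type target struct {
	Expr  string `json:"expr"`
	RefID string `json:"refId"`
}

type gridPos struct {
	H int `json:"h"`
	W int `json:"w"`
	X int `json:"x"`
	Y int `json:"y"`
}

type panel struct {
	Title   string   `json:"title"`
	Type    string   `json:"type"`
	GridPos gridPos  `json:"gridPos"`
	Targets []target `json:"targets"`
}

type dashboard struct {
	Title  string   `json:"title"`
	Tags   []string `json:"tags"`
	Panels []panel  `json:"panels"`
}

// buildDashboard returns the same two standard panels for any service,
// which keeps layout and queries consistent across dashboards.
func buildDashboard(service string) dashboard {
	rate := fmt.Sprintf(`sum(rate(http_requests_total{job=%q}[5m]))`, service)
	p99 := fmt.Sprintf(`histogram_quantile(0.99, sum by (le) (rate(http_request_duration_seconds_bucket{job=%q}[5m])))`, service)
	return dashboard{
		Title: service + " service",
		Tags:  []string{"generated", service},
		Panels: []panel{
			{Title: "Request rate", Type: "timeseries", GridPos: gridPos{H: 8, W: 12, X: 0, Y: 0}, Targets: []target{{Expr: rate, RefID: "A"}}},
			{Title: "p99 latency (s)", Type: "timeseries", GridPos: gridPos{H: 8, W: 12, X: 12, Y: 0}, Targets: []target{{Expr: p99, RefID: "A"}}},
		},
	}
}

func main() {
	enc := json.NewEncoder(os.Stdout)
	enc.SetIndent("", "  ")
	for _, svc := range []string{"auth", "checkout"} {
		if err := enc.Encode(buildDashboard(svc)); err != nil {
			fmt.Fprintln(os.Stderr, err)
			os.Exit(1)
		}
	}
}
```

Keeping the generator in version control means a change to the template is reviewed once and rolled out to every service dashboard together.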

Dashboarding takes your monitoring data and puts it in context to tell a story. Well-designed dashboards empower engineers to quickly diagnose issues.

Example of Nginx K8s Ingress Dashboard:

Alerting Rules

Set up alerting rules triggered by critical metrics and log events. Prioritize alerts for the most severe issues impacting users. Configure sensible thresholds and use intelligent grouping to prevent alert floods. Route alerts to appropriate responders.
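Grouping is easiest to see in code. The sketch below buckets alerts by a chosen set of labels so that each bucket produces one notification rather than one per alert. The `alert` type and label names are hypothetical; in practice Alertmanager's `group_by` setting performs this step.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// alert is a hypothetical, simplified alert: just a set of labels.
type alert struct {
	Labels map[string]string
}

// groupAlerts buckets alerts by the values of the groupBy labels, so each
// bucket can be sent as one notification instead of one per alert.
func groupAlerts(alerts []alert, groupBy []string) map[string][]alert {
	groups := make(map[string][]alert)
	for _, a := range alerts {
		parts := make([]string, 0, len(groupBy))
		for _, name := range groupBy {
			parts = append(parts, name+"="+a.Labels[name])
		}
		key := strings.Join(parts, ",")
		groups[key] = append(groups[key], a)
	}
	return groups
}

func main() {
	alerts := []alert{
		{Labels: map[string]string{"alertname": "HighRequestLatency", "service": "auth", "instance": "auth-1"}},
		{Labels: map[string]string{"alertname": "HighRequestLatency", "service": "auth", "instance": "auth-2"}},
		{Labels: map[string]string{"alertname": "HighErrorRate", "service": "checkout", "instance": "checkout-1"}},
	}
	groups := groupAlerts(alerts, []string{"alertname", "service"})

	keys := make([]string, 0, len(groups))
	for k := range groups {
		keys = append(keys, k)
	}
	sort.Strings(keys) // map iteration order is random; sort for stable output
	for _, k := range keys {
		fmt.Printf("%s: %d alert(s)\n", k, len(groups[k]))
	}
}
```

Here three firing alerts collapse into two notifications, because both `auth` instances share the same alert name and service.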

Create clear, actionable alerts. Include all relevant context directly in the alert notification. Add links to impacted dashboards and debugging tools to speed investigation.

Maintain an alert runbook documenting standard responses for each alert. Cover troubleshooting steps, mitigations, and escalations. Make runbooks easily accessible so on-call staff can quickly react to alerts. Review and update runbooks regularly.

Set an SLO for alert accuracy and tune rules to minimize false positives. False alerts erode trust and increase on-call burden. Balance sensitivity against the false positive rate so you alert early on real issues without excessive noise.

Effective alerting directs attention to issues requiring intervention. Well-designed alerts and runbooks empower responders to quickly restore service health.

Alert fatigue can occur if teams are overwhelmed by too many alerts, especially false positives. Make sure to measure factors like alert accuracy, false positive rate, and responder feedback to gauge and prevent excessive alert noise.
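One simple way to measure alert accuracy is to record responder feedback for each fired alert and compute the share that were real. This is a minimal sketch with a hypothetical `firedAlert` record. Note that the "noise share" here is the fraction of fired alerts that were not actionable, which is what most teams mean informally by false positive rate.

```go
package main

import "fmt"

// firedAlert is a hypothetical record of one alert plus on-call feedback.
type firedAlert struct {
	Name       string
	Actionable bool // true if the responder confirmed a real issue
}

// alertStats returns precision (share of fired alerts that were real)
// and noiseShare (share of fired alerts that were not actionable).
func alertStats(alerts []firedAlert) (precision, noiseShare float64) {
	if len(alerts) == 0 {
		return 0, 0
	}
	actionable := 0
	for _, a := range alerts {
		if a.Actionable {
			actionable++
		}
	}
	precision = float64(actionable) / float64(len(alerts))
	return precision, 1 - precision
}

func main() {
	week := []firedAlert{
		{Name: "HighRequestLatency", Actionable: true},
		{Name: "HighRequestLatency", Actionable: false},
		{Name: "HighErrorRate", Actionable: true},
		{Name: "DiskAlmostFull", Actionable: true},
	}
	precision, noise := alertStats(week)
	fmt.Printf("precision=%.2f noise=%.2f\n", precision, noise)
}
```

Tracking these two numbers per alert rule over time shows which rules need retuning before they contribute to fatigue.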

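Example of a Prometheus alerting rule and the Alertmanager receiver it is routed to:

    # Prometheus rule file (loaded via rule_files in prometheus.yml).
    # The group name is illustrative.
    groups:
      - name: service-alerts
        rules:
          - alert: HighRequestLatency
            expr: job:request_latency_seconds:mean5m{job="myjob"} > 1
            for: 5m
            labels:
              severity: warning
            annotations:
              summary: High request latency detected
              runbook_url: https://internal.codereliant.io/wiki/alerts/high-latency
              dashboard: https://grafana.codereliant.io/d/abcd1234/service-dashboard
              info: Latency is above 1000ms for job {{ $labels.job }}

    # Alertmanager configuration (alertmanager.yml).
    # Email delivery also requires SMTP settings, omitted here.
    route:
      receiver: team-sre
    receivers:
      - name: team-sre
        email_configs:
          - to: sre@codereliant.io

On-Demand Debugging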

Implement advanced debugging affordances to facilitate ad hoc investigation of issues:

Request Logging: Log full request and response payload details. Capture identifiers like request ID for tracing.

Event Replay: Record live traffic and allow replaying production requests for debugging.

Snapshots: Take on-demand heap, thread, and goroutine snapshots for performance profiling.

Tail Sampling: Continuously capture a subset of real-time requests for debugging.

Dynamic Instrumentation: Toggle additional metrics, logs, and traces in production on the fly.

On-Demand or Continuous Profiling: Collect CPU and memory profiles continuously or on demand, without needing to know service internals.

CLI Tools: Provide CLI diagnostic tools for querying current system state and configurations.

Metrics Endpoint: Expose an endpoint that provides current metrics values for ad hoc performance analysis.

Debugging Dashboard: Offer a dedicated real-time dashboard focused on debugging needs.

The goal is to enable engineers to quickly answer questions and investigate issues by providing direct access to comprehensive runtime data. On-demand debugging unlocks definitive answers.

Culture of Observability

Foster a culture that values monitoring, alerting, and debugging. Set expectations that engineers will produce quality instrumentation for their services. Empower everyone to question data and seek answers. Continuously iterate on your observability strategy.

Observability enables understanding of your complex systems. Following these best practices will set your services up for success.